|

Previously, I was a Master's student in Robotics in the Robotics Institute at Carnegie Mellon University, advised by Prof. Sebastian Scherer as a member of the AirLab and the Field Robotics Center. Before that, I studied electrical engineering at Tufts University, where I received my BS. Email / CV (Oct. 2025) / Google Scholar / Twitter / GitHub |

|

|

I am interested in reinforcement learning and representation learning for fast adaptation and generalization in messy environments. My ultimate goal is to develop robots that adapt fast during deployment, probe when uncertain, and make mistakes only once, if at all. |

|

Mateo Guaman Castro, Sidharth Rajagopal, Daniel Gorbatov, Matthew Schmittle, Rohan Baijal, Octi Zhang, Rosario Scalise, Sidharth Talia, Emma Romig, Celso de Melo, Byron Boots, Abhishek Gupta, Submitted to International Conference on Robotics and Automation (ICRA), 2026 Oral at the CoRL 2025 Workshop on Generalist Policies in the Wild & Robo-Arena Challenge pdf / project page VAMOS is a hierarchical vision-language-action model that decouples semantic planning from embodiment grounding, enabling robust cross-embodiment navigation with natural language steerability. |

|

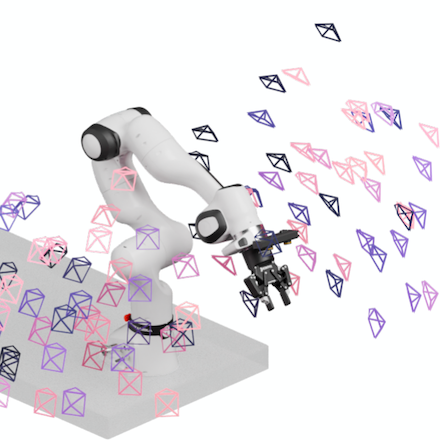

Matthew Schmittle, Rohan Baijal, Nathan Hatch, Rosario Scalise, Mateo Guaman Castro, Sidharth Talia, Khimya Khetarpal, Siddhartha Srinivasa, Byron Boots, Conference on Robot Learning (CoRL), 2025 Best Paper Award, RSS 2025 Workshop on Resilient Off-road Autonomous Robotics arXiv / pdf / project page Long Range Navigator enables robots to look beyond local maps through affordances in image space. |

|

Yuxiang Yang, Guanya Shi, Changyi Lin, Xiangyun Meng, Rosario Scalise, Mateo Guaman Castro, Wenhao Yu, Tingnan Zhang, Ding Zhao, Jie Tan, Byron Boots International Conference on Robotics and Automation (ICRA), 2025 arXiv / pdf / project page We developed a system that enables quadrupedal robots to perform continuous, precise jumps across challenging terrains like stairs and stepping stones, achieving unprecedented agility. |

|

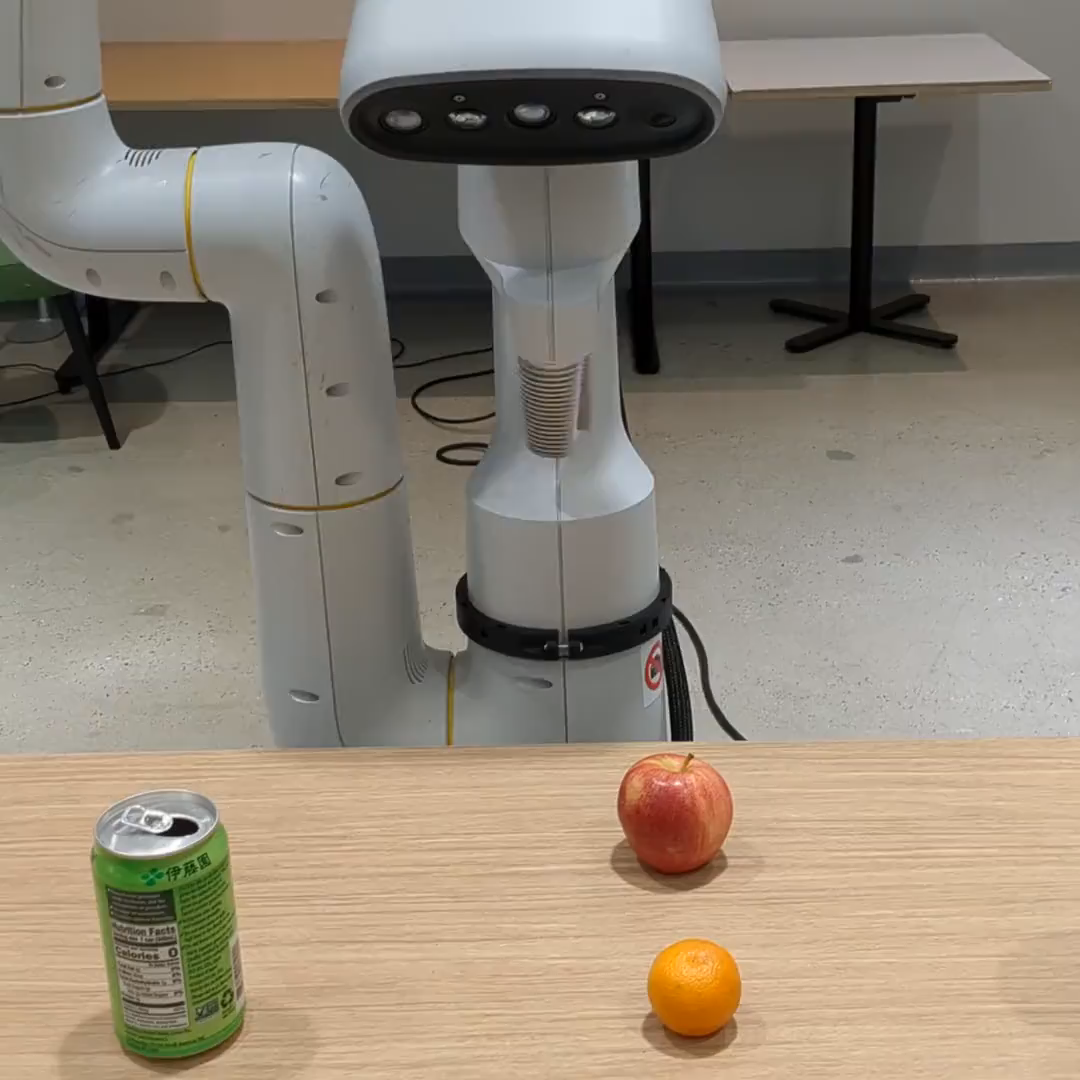

DROID Dataset Team Robotics: Science and Systems (RSS), 2024 arXiv / pdf / project page We present a large dataset for robot learning. |

|

Open X-Embodiment Collaboration International Conference on Robotics and Automation (ICRA), 2024 IEEE ICRA Best Conference Paper Award arXiv / pdf / project page We present a large dataset for robot learning. |

|

Matthew Sivaprakasam, Parv Maheshwari, Mateo Guaman Castro, Samuel Triest, Micah Nye, Steven Willits, Andrew Saba, Wenshan Wang, Sebastian Scherer International Conference on Robotics and Automation (ICRA), 2024 arXiv / pdf / IEEE / project page / video We present a dataset for off-road driving with multiple modalities at high speed. |

|

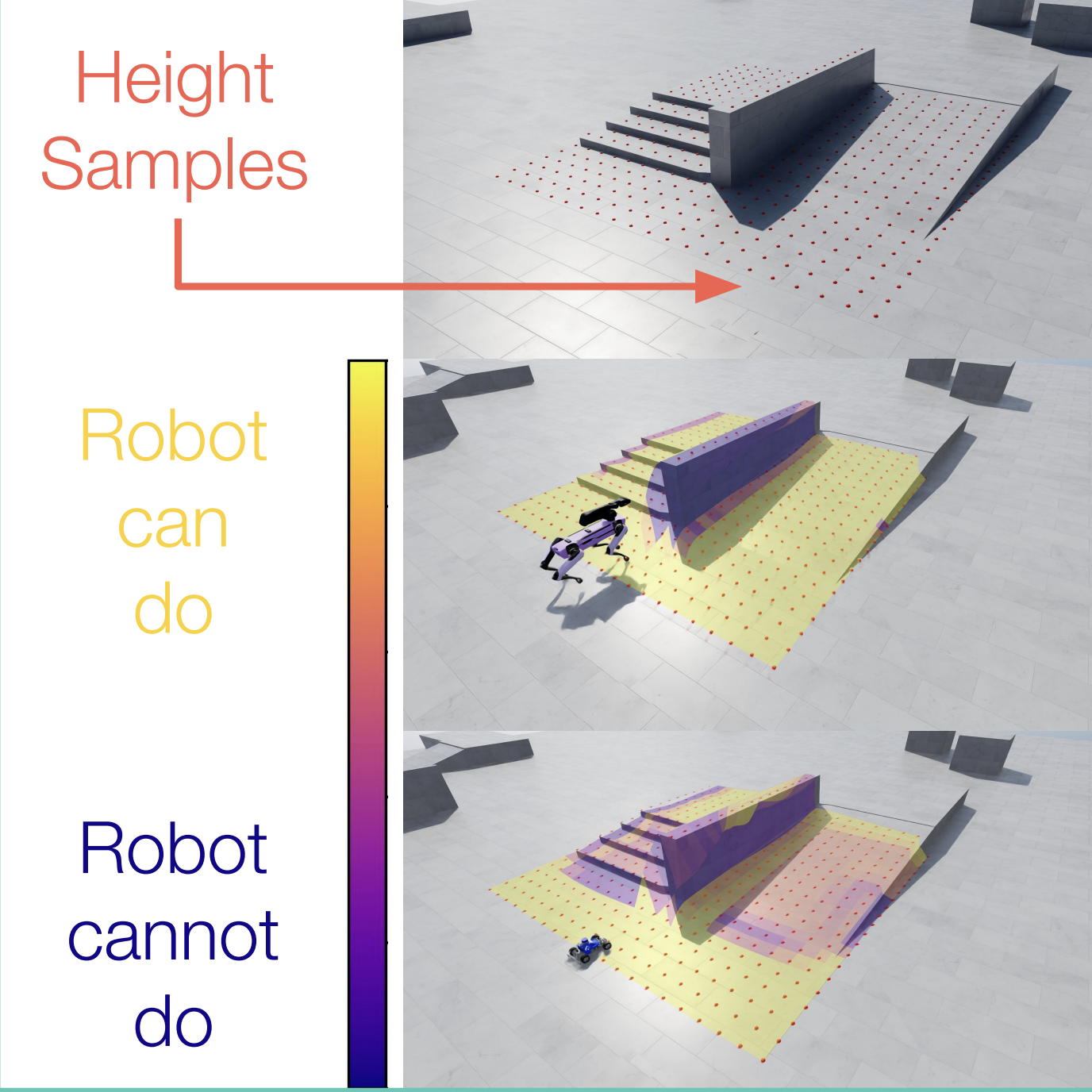

Mateo Guaman Castro, Samuel Triest, Wenshan Wang, Jason M. Gregory, Felix Sanchez, John G. Rogers III, Sebastian Scherer International Conference on Robotics and Automation (ICRA), 2023 arXiv / pdf / IEEE / project page / short video / long video We propose a self-supervised method to predict traversability costmaps by combining exteroceptive environmental information with proprioceptive terrain interaction feedback. |

|

Samuel Triest, Mateo Guaman Castro, Parv Maheshwari, Matthew Sivaprakasam, Wenshan Wang, Sebastian Scherer International Conference on Robotics and Automation (ICRA), 2023 arXiv / pdf / IEEE We present an inverse reinforcement learning-based method that efficiently predicts uncertainty-aware costmaps for off-road traversability via conditional value-at-risk (CVaR). |

|

Matthew Sivaprakasam, Samuel Triest, Mateo Guaman Castro, Micah Nye, Mukhtar Maulimov, Cherie Ho, Parv Maheshwari, Wenshan Wang, Sebastian Scherer Workshop on Pretraining for Robotics (PT4R), International Conference on Robotics and Automation (ICRA), 2023 In this work, we discuss the improvements to our previous dataset, TartanDrive. |

|

Evana Gizzi, Wo Wei Lin, Mateo Guaman Castro, Ethan Harvey, Jivko Sinapov International Conference on Computational Creativity (ICCC), 2022 In this work, we investigate methods for life-long creative problem solving (LLCPS), with the goal of increasing CPS capability over time. |

|

Faizan Muhammad, Vasanth Sarathy, Gyan Tatiya, Shivam Goel, Saurav Gyawali, Mateo Guaman Castro, Jivko Sinapov, Matthias Scheutz International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2021 We present a formal framework and implementation in a cognitive agent for novelty handling and demonstrate the efficacy of the proposed methods for detecting and handling a large set of novelties in a crafting task in a simulated environment. |

|

Evana Gizzi, Mateo Guaman Castro, Wo Wei Lin, Jivko Sinapov Workshop on Declarative and Neurosymbolic Representations in Robot Learning and Control, Robotics: Science and Systems (RSS), 2021 We introduce a unified framework for creative problem solving through action discovery. We describe two methods that enable action discovery at the declarative and neurosymbolic levels, through action primitive segmentation and behavior babbling, respectively. |

|

Evana Gizzi, Mateo Guaman Castro, Jivko Sinapov International Conference on Development and Learning (ICDL), 2019 pdf / IEEE We describe a method for discovering new action primitives through object exploration and action segmentation, which iteratively updates the robot's knowledge base on the fly until the solution becomes feasible. |

|

| Website template from Jon Barron. |